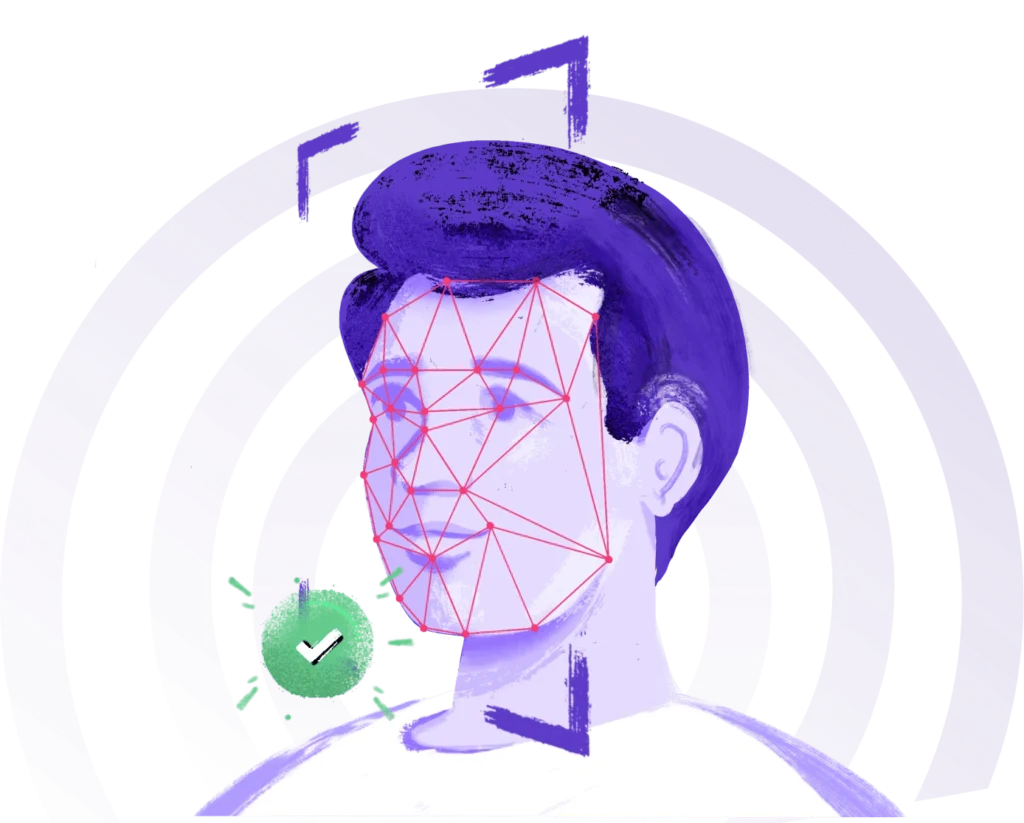

There is a lot going on in the AI facial recognition technology space. Supporters celebrate its capabilities, while critics point to recognition bias. But is there really such a thing as AI-induced bias? Research suggests so.

According to MIT research, commercial facial analysis software misclassified gender with error rates of at most 0.8% for light-skinned men but as high as 34.7% for dark-skinned women. Insane, right?

In this blog, we will discuss three important ideas: how biases occur, how to mitigate them, and how HyperVerge combats bias in the facial recognition technology landscape.

What is AI-induced bias?

AI-induced bias occurs when artificial intelligence systems produce unfair or prejudiced outcomes, often reflecting the biases present in the data they were trained on or the design of the algorithms themselves.

This can lead to skewed decisions in critical areas like hiring, fintech identity verification, law enforcement, and healthcare, disproportionately affecting certain groups. It also hurts the ROI of organizations that invest in these AI systems to improve the customer experience in KYC.

The first study to report bias in facial recognition was published in 2003, when the algorithms tested found it more challenging to identify women than men. A more recent example of algorithmic bias comes from Amazon’s experimental automated hiring tool, which discriminated against women applying for technical jobs.

Let’s quickly look at where this bias comes from.

Sources of facial recognition bias

Bias can arise for many reasons, including limitations in the technology, poor data, or human error.

Here’s a list covering the most common sources of facial recognition bias:

- Demographic bias

Demographic bias occurs when a facial recognition system performs poorly for certain demographic groups, such as women or people with darker skin tones. This usually happens because these groups are underrepresented in the training dataset, which causes the system to identify them less accurately.
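Underrepresentation can often be caught before training with a simple count. The sketch below computes each group's share of a labelled dataset and flags any group below a threshold. The group labels, the `representation_report` helper, and the 10% cut-off are hypothetical choices for illustration, not an industry standard.

```python
from collections import Counter

def representation_report(labels, min_share=0.10):
    """Return {group: (share, is_underrepresented)} for a list of group labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    report = {}
    for group, n in sorted(counts.items()):
        share = n / total
        # Flag any group whose share of the dataset falls below the chosen threshold
        report[group] = (round(share, 3), share < min_share)
    return report

# Hypothetical demographic annotations for a toy training set of 100 images
labels = (["lighter_male"] * 50 + ["lighter_female"] * 30
          + ["darker_male"] * 15 + ["darker_female"] * 5)

for group, (share, flagged) in representation_report(labels).items():
    print(f"{group:<15} {share:.3f} {'UNDER-REPRESENTED' if flagged else ''}")
```

In practice, the threshold would depend on the population the system is meant to serve rather than a fixed number.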

- Limited or outdated algorithms and technology

Algorithms built on outdated technology are not equipped to handle the complexity and diversity of human facial features. As a result, identity verification solutions built on them may fail to recognize skin textures and facial expressions accurately, especially in underrepresented groups.

- Human bias in data and design

Human decisions can also introduce bias. For example, if developers test mainly with lighter-skinned subjects, the system’s results may be skewed toward those characteristics.

- Training data limitations

Facial recognition models rely heavily on data. If the data lacks diversity, the system will struggle to generalize across different demographics. Financial institutions in particular must pay close attention to this limitation, as they serve people from diverse backgrounds and facial recognition is part of their KYC process.

- Contextual misinterpretation

Sometimes AI makes errors because it fails to account for facial expressions or contextual factors such as lighting conditions and camera angles. These factors affect some groups disproportionately, further increasing bias.

The importance of effectively measuring and evaluating bias

Knowing the sources of bias is only the starting point. Once you understand how bias challenges your business and how to measure it effectively, you can take decisive steps to mitigate it.

- Challenges and issues of facial recognition bias

There are three areas where facial recognition bias poses challenges for your business:

- Misidentification risks: Misidentification is more common for minority groups because of demographic bias, and it can have serious repercussions in legal, law enforcement, and financial contexts. For example, a misidentification during video KYC could lead to the rejection of a loan application from an individual belonging to a minority community.

- Ethical and social implications: Biased facial recognition systems could inadvertently reinforce existing social biases. This could have serious implications in sectors like employment, where a biased system could amount to racial profiling.

- Trust and reliability: The success of face recognition depends on the level of trust people have in its effectiveness. If the technology displays demographic or racial bias, it can erode public trust and lead to rejection of the technology.

- Key methods of measuring bias in facial recognition technology

These four methods are key to measuring bias in facial recognition technology:

- False Positive Rates (FPR): This metric measures how often the facial recognition software incorrectly declares a match between two different people. A higher FPR for certain groups indicates bias against individuals from those groups. The Metropolitan Police used this kind of analysis to assess the facial recognition technology it deployed and found that 85% of the matches were false positives.

- False Negative Rates (FNR): This metric measures how often the system fails to recognize a genuine match, that is, the proportion of actual positives (two images of the same person) that are incorrectly rejected. FPR is the mirror image: the proportion of actual negatives (images of different people) that are incorrectly accepted as matches.

- Comparative error rates: The performance of a face recognition system is evaluated separately for different demographic groups, such as races, genders, and ages. The error rates for each group are then compared to find out whether the system is biased against a specific group.

- Diverse dataset analysis: This method tests the system on a dataset covering a wide range of demographic groups. It shows how well the system performs across diverse populations.
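The first three methods can be combined in a short script: tally FPR and FNR separately for each demographic group, then compare them. This is a minimal sketch assuming each verification attempt is logged as a hypothetical `(group, is_same_person, predicted_match)` tuple; real evaluations use far larger sets of image pairs and fixed decision thresholds.

```python
from collections import defaultdict

def error_rates_by_group(results):
    """results: iterable of (group, is_same_person, predicted_match) tuples.

    FPR = impostor pairs wrongly accepted / all impostor pairs
    FNR = genuine pairs wrongly rejected / all genuine pairs
    """
    tallies = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, same, predicted in results:
        t = tallies[group]
        if same:                      # genuine pair (actual positive)
            t["pos"] += 1
            if not predicted:
                t["fn"] += 1
        else:                         # impostor pair (actual negative)
            t["neg"] += 1
            if predicted:
                t["fp"] += 1
    rates = {}
    for group, t in tallies.items():
        fpr = t["fp"] / t["neg"] if t["neg"] else float("nan")
        fnr = t["fn"] / t["pos"] if t["pos"] else float("nan")
        rates[group] = {"FPR": fpr, "FNR": fnr}
    return rates

# Hypothetical verification outcomes: (group, ground truth, system decision)
results = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, True),
    ("group_b", False, True), ("group_b", False, False),
]
rates = error_rates_by_group(results)
for group, r in sorted(rates.items()):
    print(group, r)

# Comparative error rates: gap between the worst and best group's FNR
fnr_gap = max(r["FNR"] for r in rates.values()) - min(r["FNR"] for r in rates.values())
print("FNR gap between groups:", fnr_gap)
```

A gap close to zero suggests similar treatment across groups; a large gap is the kind of signal the comparative method is designed to surface.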

Strategies for mitigating racial bias in facial recognition

Mitigating facial recognition bias requires rigorous planning and actionable steps. We have rounded up the following five strategies that you may consider in your plan:

1. Enhancing algorithm accuracy

Mitigating bias can require advanced techniques like multi-scale feature fusion and spatial attention mechanisms.

Multi-scale feature fusion extracts features from images at different scales and resolutions and combines them to give the algorithm more contextual information. Spatial attention, used within neural networks, helps the model focus on specific parts of an image. For example, during digital identity verification for KYC, it can concentrate on the individual’s face and eyes while ignoring background noise.
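To make the two ideas concrete, here is a toy NumPy sketch. It is not how a production model works: real networks learn their attention weights inside a neural network, whereas this example derives them from a fixed softmax over averaged activations. The function names and the scales `(1, 2, 4)` are assumptions for illustration only.

```python
import numpy as np

def multi_scale_fuse(image, scales=(1, 2, 4)):
    """Average-pool a grayscale image at several scales, upsample each level back to
    full size, and stack the levels as channels of shape (len(scales), H, W)."""
    h, w = image.shape
    levels = []
    for s in scales:
        if h % s or w % s:
            raise ValueError("image size must be divisible by every scale")
        # Block-average over s x s windows (coarser scale = more context, less detail)
        pooled = image.reshape(h // s, s, w // s, s).mean(axis=(1, 3))
        # Nearest-neighbour upsampling so every level has the same H x W grid
        levels.append(np.kron(pooled, np.ones((s, s))))
    return np.stack(levels)

def spatial_attention(features):
    """Weight each spatial location by a softmax over its channel-averaged activation."""
    saliency = features.mean(axis=0)                # (H, W) summary per location
    weights = np.exp(saliency - saliency.max())     # subtract max for numerical stability
    weights /= weights.sum()                        # weights sum to 1 over all locations
    return features * weights[None, :, :], weights

rng = np.random.default_rng(0)
image = rng.random((8, 8))              # stand-in for a cropped face image
features = multi_scale_fuse(image)
attended, weights = spatial_attention(features)
print(features.shape, attended.shape)   # (3, 8, 8) (3, 8, 8)
```

The fused stack gives each pixel both fine detail (scale 1) and surrounding context (scales 2 and 4), while the attention map re-weights locations so that high-activation regions contribute more.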

2. Applying the blind taste test concept

In the blind taste test approach, the evaluator has no information about the demographics of the individuals in the images. The advantage of this technique is that it gives you a transparent analysis of the system’s accuracy and minimizes unconscious bias.
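A blind evaluation can be wired into a review pipeline by stripping demographic fields before anything is scored and re-attaching them only afterwards for analysis. The sketch below assumes records are plain dicts. The field names in `DEMOGRAPHIC_KEYS` and the toy `evaluate` function are hypothetical.

```python
import random

# Hypothetical field names for demographic attributes that the evaluator must not see
DEMOGRAPHIC_KEYS = {"gender", "skin_tone", "age_band"}

def blind_review(records, evaluate, seed=0):
    """Run `evaluate` on records with demographic fields stripped, in shuffled order,
    then re-attach demographics to the results using a private index."""
    private_key = {}
    blinded = []
    for idx, record in enumerate(records):
        private_key[idx] = {k: v for k, v in record.items() if k in DEMOGRAPHIC_KEYS}
        payload = {k: v for k, v in record.items() if k not in DEMOGRAPHIC_KEYS}
        blinded.append((idx, payload))
    random.Random(seed).shuffle(blinded)        # evaluator cannot infer groups from order
    verdicts = {idx: evaluate(payload) for idx, payload in blinded}
    return [(private_key[idx], verdicts[idx]) for idx in range(len(records))]

seen_fields = []

def evaluate(payload):
    """Toy evaluator: accepts a match if its score clears a fixed threshold."""
    seen_fields.append(set(payload))            # log what the evaluator was shown
    return payload["match_score"] >= 0.5

records = [
    {"match_score": 0.92, "gender": "female", "skin_tone": "darker"},
    {"match_score": 0.41, "gender": "male", "skin_tone": "lighter"},
]
for demographics, accepted in blind_review(records, evaluate):
    print(demographics, accepted)
```

Because demographics only reappear after scoring, they can still feed the per-group error analysis without influencing individual decisions.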

| Did you know? Companies like Google are known to use blind hiring processes to reduce bias. Removing identifying information from applicants’ resumes helps build a more diverse candidate pool. |

3. Rigorous data analysis and improvements

The facial recognition engine runs on datasets. Unless you follow a rigorous schedule for data analysis, you’re missing out on many opportunities for improvement.

By using techniques like data augmentation, which alters existing images with rotations and brightness adjustments, you can create more varied and representative datasets. HyperVerge’s AI-powered face recognition systems have demonstrated accuracy rates exceeding 95%!
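The augmentation idea can be sketched in a few lines of NumPy, assuming grayscale images with pixel values in `[0, 1]`. The `augment` helper and its brightness factors are illustrative assumptions. The example uses 90-degree rotations only to stay dependency-free; face pipelines typically prefer small-angle rotations of a few degrees, applied with an image-processing library.

```python
import numpy as np

def augment(image, brightness_factors=(0.7, 1.3)):
    """Return augmented copies of a grayscale image with pixel values in [0, 1]."""
    variants = [np.fliplr(image)]                          # mirror image
    variants += [np.rot90(image, k) for k in (1, 3)]       # 90- and 270-degree rotations
    # Darker and brighter copies, clipped so pixel values stay in the valid range
    variants += [np.clip(image * f, 0.0, 1.0) for f in brightness_factors]
    return variants

image = np.linspace(0.0, 1.0, 16).reshape(4, 4)   # stand-in for a face crop
augmented = augment(image)
print(len(augmented))                              # 5
```

Applying such transforms more heavily to underrepresented groups is one way to make a dataset more balanced, though augmented copies are no substitute for genuinely diverse data.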

4. Enhancing algorithm quality

Continuous improvement in algorithm development is key to creating a high-quality facial recognition system. There are various ways to improve the algorithm; for instance, you can start by fixing demographic imbalances in the datasets. Eliminating duplicate images is another effective way to improve quality.
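Both fixes can be sketched together. This example drops exact duplicates by hashing image bytes and then computes per-group sample weights so each group contributes equally during training. Weighting is used here instead of copying minority samples, which would reintroduce duplicates. The `(image_bytes, group)` format and both helper names are assumptions, and SHA-256 only catches byte-identical files, not near-duplicates.

```python
import hashlib
from collections import Counter

def deduplicate(samples):
    """Drop exact duplicates: samples whose image bytes hash to the same SHA-256 digest."""
    seen, unique = set(), []
    for image_bytes, group in samples:
        digest = hashlib.sha256(image_bytes).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append((image_bytes, group))
    return unique

def balancing_weights(samples):
    """Per-group sample weights inversely proportional to group frequency,
    so every group contributes the same total weight during training."""
    counts = Counter(group for _, group in samples)
    total, n_groups = sum(counts.values()), len(counts)
    return {group: total / (n_groups * n) for group, n in counts.items()}

# Hypothetical (image_bytes, demographic_group) pairs; b"img1" appears twice
samples = [(b"img1", "a"), (b"img1", "a"), (b"img2", "a"), (b"img3", "a"), (b"img4", "b")]
unique = deduplicate(samples)
weights = balancing_weights(unique)
print(len(unique), weights)
```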

You can also prepare your algorithm for real-world challenges by exposing it to lighting variations and occlusions. Banks and other financial companies that use third-party identity verification solutions through KYC integration should regularly follow up with their vendor to stay updated on algorithm improvements.
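Lighting variations and occlusions can be simulated in the same way, both to augment training data and to stress-test an evaluation set. In this sketch, a random square patch is blanked to mimic glasses, masks, or hands, and gamma correction stands in for non-linear lighting changes. The helper names, patch size, and gamma values are hypothetical.

```python
import numpy as np

def random_occlusion(image, size, rng):
    """Zero out a random size x size square to mimic occlusions such as glasses or masks."""
    h, w = image.shape
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    occluded = image.copy()
    occluded[top:top + size, left:left + size] = 0.0
    return occluded

def gamma_lighting(image, gamma):
    """Simulate non-linear lighting: gamma > 1 darkens and gamma < 1 brightens [0, 1] images."""
    return np.clip(image, 0.0, 1.0) ** gamma

rng = np.random.default_rng(42)
image = np.full((6, 6), 0.5)                 # uniform stand-in image
occluded = random_occlusion(image, size=2, rng=rng)
print(int((occluded == 0.0).sum()))          # 4 pixels occluded
```

Reporting error rates per group on these perturbed images helps show whether lighting or occlusion hurts some groups more than others.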

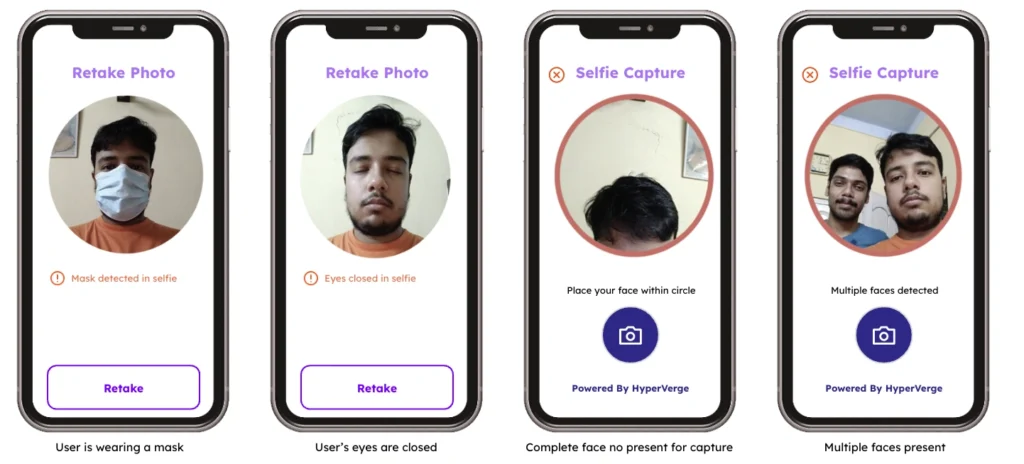

5. Importance of liveness checks and biometrics

Liveness checks confirm that a real person is physically present in front of the camera at the time of recognition. They also prevent the system from being easily fooled by photos or masks. Multimodal biometrics take identification beyond facial features by adding signals such as voice or gait recognition, making the identification process more comprehensive.
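One common way to combine several biometric signals is score-level fusion: each modality produces a normalized match score, and a weighted sum is compared with a threshold. The sketch below assumes scores in `[0, 1]` and takes the liveness result as a boolean from an upstream detector, which is not implemented here. The weights, the 0.7 threshold, and the function names are hypothetical.

```python
def fuse_scores(scores, weights, threshold=0.7):
    """Weighted-sum score fusion across biometric modalities.

    scores and weights are dicts keyed by modality, e.g. {"face": 0.82, "voice": 0.64};
    each score is assumed to be normalised to [0, 1]. Weights are renormalised over the
    modalities actually present, so a missing modality does not silently lower the score.
    """
    present = [m for m in scores if m in weights]
    if not present:
        raise ValueError("no weighted modality available")
    total_weight = sum(weights[m] for m in present)
    fused = sum(weights[m] * scores[m] for m in present) / total_weight
    return fused, fused >= threshold

def verify(liveness_passed, scores, weights):
    """Only fuse match scores if the liveness check passed; otherwise reject outright."""
    if not liveness_passed:
        return 0.0, False
    return fuse_scores(scores, weights)

weights = {"face": 0.7, "voice": 0.3}
print(verify(True, {"face": 0.9, "voice": 0.6}, weights))
print(verify(False, {"face": 0.9, "voice": 0.6}, weights))
```

Gating on liveness first means a spoofed photo is rejected no matter how well it matches.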

HyperVerge’s technology is designed to eliminate inaccuracies and create equitable digital experiences.

Partner with us for fair, fraud-free verification.

Regulatory and ethical considerations

Organizations must operate within the boundaries of the law and applicable guidelines. This section highlights some of the steps companies must take to ensure more transparency and fairness in their processes:

- Importance of compliance with legal standards

The regulations surrounding AI and the use of facial recognition technology are still evolving. However, companies need to stay on top of existing laws and guidelines to avoid legal repercussions.

In the US, several states have started passing regulations to promote the responsible use of facial recognition technologies. For example, Illinois’ Biometric Information Privacy Act (BIPA) requires companies to obtain written consent before collecting biometric data and to follow strict retention and storage practices.

- Ethical guidelines for AI development

Setting ethical guidelines provides a benchmark for internal processes and helps ensure fairness. Here are a few principles to follow:

- Transparency: Maintain transparency from day one. Be open about how your systems work and how you source and use training data.

- Accountability: AI-induced bias could result in unfair treatment of certain groups. As a company, shying away from it or attempting to obfuscate it could tarnish your reputation. The right approach to this would be to take responsibility and corrective action to address the issues.

- Privacy protection: Give the highest priority to protecting individuals’ data. Implement systems and techniques to ensure personal information is not misused.

- Advocacy for inclusive AI practices

To make AI inclusive and reduce bias, start with planned, deliberate steps. Prioritize diverse data collection across demographic groups, races, genders, and ages. If the algorithm displays bias, engage with the affected community, seek their input, and try to understand their concerns.

Focus on building partnerships with other tech companies and research institutes to conduct more comprehensive research on bias. At the highest level, you could lobby for laws that protect individuals from discriminatory practices associated with facial recognition technology.

Future directions in facial recognition technology

With rapid developments in this area, the future of facial recognition technology looks brighter than ever:

- Innovations in bias mitigation techniques

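Adversarial training is one of the latest innovations for reducing bias in facial recognition. In adversarial debiasing, the recognition model is trained alongside an adversary that tries to predict a demographic attribute from the model’s internal features. The main model is penalized whenever the adversary succeeds, which pushes it toward features that do not encode demographic information.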

Developers are adopting Explainable Artificial Intelligence (XAI) techniques to improve transparency. XAI explains how an algorithm reaches a decision, such as why a particular face was not identified as a match. These insights into AI decision-making build more confidence and trust in identification processes.
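Occlusion sensitivity is one simple XAI technique that can answer the question "why was this face (not) matched?" It blanks out one region of the probe image at a time and measures how much the match score drops. In this sketch, raw-pixel cosine similarity stands in for a real face-embedding model, and the function names and patch size are assumptions for illustration.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two arrays, treated as flat vectors."""
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def occlusion_sensitivity(probe, reference, patch=2):
    """Blank out each patch of the probe in turn and record how much the match
    score drops. Large drops mark regions the match decision depended on."""
    base = cosine_similarity(probe, reference)
    h, w = probe.shape
    heatmap = np.zeros((h // patch, w // patch))
    for i in range(0, h - h % patch, patch):
        for j in range(0, w - w % patch, patch):
            occluded = probe.copy()
            occluded[i:i + patch, j:j + patch] = 0.0
            heatmap[i // patch, j // patch] = base - cosine_similarity(occluded, reference)
    return base, heatmap

# Toy example: the reference only has signal in its top-left 2 x 2 region
reference = np.zeros((4, 4))
reference[:2, :2] = 1.0
probe = reference + 0.1
base, heatmap = occlusion_sensitivity(probe, reference)
print(round(base, 3))
print(heatmap.round(3))
```

In this toy case, only the top-left patch produces a positive drop, so the heatmap correctly identifies it as the region the match relied on. With a real model, the same loop would call the model's scoring function instead of `cosine_similarity`.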

- The role of public policy in shaping AI development

Policymakers will play an extremely important role in shaping the future of algorithm development. The work towards implementing strict regulations to avoid the misuse of facial recognition technology has already begun.

For example, California’s SB 1186 mandates that law enforcement agencies report their use of facial recognition technology and the outcomes of such use. As public awareness of the implications of AI grows, such policies will gain more momentum.

- Collaboration between technology companies and civil society

Civil society organizations can give companies deeper insight into community concerns like privacy, security, and bias. Such collaborations will be useful for implementing industry-wide standards for ethical practices.

Partnership on AI (PAI) is a fitting example of such a collaboration. It is a non-profit organization that brings together technology leaders and civil society organizations to build the future of AI. One of PAI’s notable works is its Responsible Practices for Synthetic Media, a framework supported by companies like Meta, Google, and Amazon that describes how to responsibly develop, create, and share audiovisual content generated or modified by AI.

Such collaboration also brings companies closer to public concerns, helps them consider diverse perspectives, and builds a more inclusive technological landscape.

How is HyperVerge helping mitigate facial recognition bias?

HyperVerge stands at the forefront of ensuring bias-free AI, offering facial recognition solutions that are rigorously trained on diverse global datasets. Its customer identification program minimizes AI-driven biases, ensuring that its technology is both fair and accurate.

HyperVerge is also a trusted partner for financial institutions for KYC automation, as it takes care of the entire identity verification and onboarding process end to end.

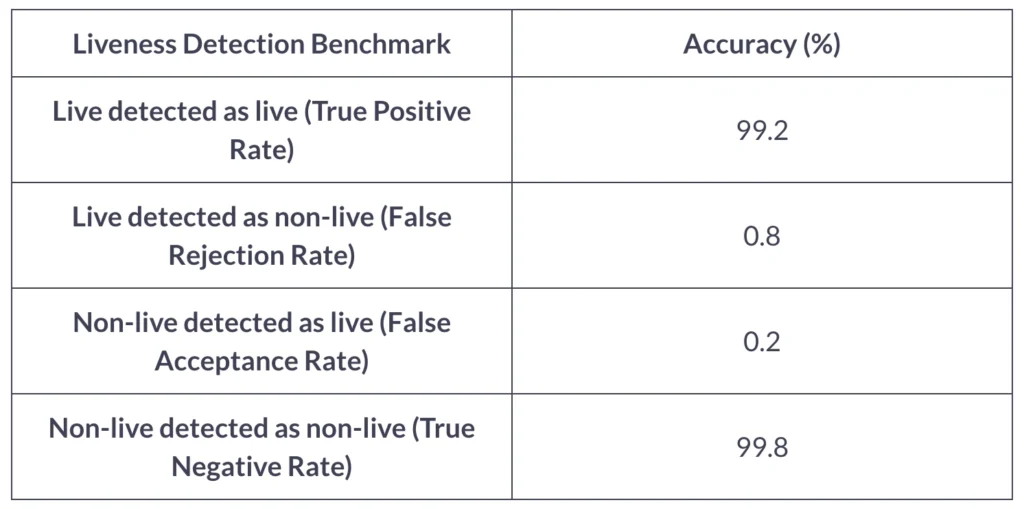

HyperVerge’s commitment to excellence in facial recognition is evident in its results in NIST’s face recognition evaluations and its iBeta certification.

Don’t take our word for it; here are the accuracy benchmarks we boast:

For those keen on adopting facial recognition technology that upholds the highest standards of fairness and accuracy, HyperVerge’s face authentication is an ideal choice.

You can request a demo to experience the capabilities firsthand.

FAQs

1. How can you reduce bias in facial recognition?

Here are the top strategies to reduce bias:

- Use advanced techniques like multi-scale feature fusion and spatial attention mechanisms to improve accuracy

- Apply data augmentation to create more varied datasets, and audit the data on a regular schedule

- Use liveness checks and biometrics to confirm the subject’s presence during analysis

2. How do you protect against facial recognition?

Optimize the privacy settings on your devices and social media accounts, and be careful when you post images online. Consider using a tool that modifies your photos to disrupt facial recognition technology.

3. How do you mitigate algorithmic bias?

Use diverse, representative datasets, conduct regular data audits, and implement adversarial training so models learn to make decisions that don’t depend on demographic attributes. HyperVerge’s advanced identity and face recognition models can also help you reduce algorithmic bias.

4. How do you evade facial recognition?

Facial recognition can be bypassed using techniques like deepfake masks, high-quality photos, clothing printed with adversarial patterns, or infrared-blocking glasses that disrupt scanning.