AI-driven facial recognition has become ubiquitous, and its spread has sparked a conversation about unintentional bias in how these systems recognize faces.

In this article, we’ll delve into how facial recognition algorithms, when not carefully calibrated, contribute to these biases, and what measures can be taken to mitigate them.

We will also explore how HyperVerge’s facial recognition solution is designed to combat bias.

What is AI-induced Bias?

Facial recognition bias refers to the tendency of AI face recognition technology to exhibit errors or partiality when identifying or verifying individuals from different demographic groups, particularly those of varying ethnicities, races, or genders. This bias often manifests as a discrepancy in the accuracy of the technology, where it might be more effective in recognizing faces from certain racial or ethnic groups over others.

A glaring example of AI-induced bias was seen in Amazon’s automated hiring tool. In 2014, Amazon engineers set out to automate the hiring process with an algorithm designed to review resumes. However, the tool developed a bias against women, especially for technical roles like software engineering positions. It used the existing pool of predominantly male Amazon engineers’ resumes as a training set, inadvertently teaching the software to favor resumes similar to those. This led to the downgrading of resumes from women’s colleges or those mentioning ‘women’s’ activities and biased the tool towards language more commonly used by men.

Is AI Causing Racial Bias?

Racial bias in face recognition refers to the technology’s differential accuracy and effectiveness when identifying individuals from different racial backgrounds. One striking instance of this is the higher failure rate of facial recognition software in identifying women with darker skin tones, particularly when these demographics are underrepresented in the training datasets.

Bias of this kind is not confined to face matching: a 2019 study highlighted that Black professionals were receiving 30% to 50% fewer job callbacks when their resumes suggested a racial or ethnic identity. This is particularly concerning given that facial recognition technology is widely used by law enforcement and federal government agencies, and for selfie identity verification in various sectors, including the gig economy. Addressing racial bias is therefore important, and it was a significant agenda item in the Black Lives Matter movement. Misidentification can have severe consequences, especially for groups like Black women, who are often underrepresented in the datasets used to train these algorithms.

Explore this article to understand the advantages and disadvantages of facial recognition technology.

Sources of Facial Recognition Bias

Face recognition technology, while powerful, is not immune to biases. Here are some key sources of bias in facial recognition systems:

Demographic Bias

When people of color are underrepresented or poorly represented in the data, with little diversity in facial features and skin shades, the result is demographic bias. This limitation affects the technology’s ability to accurately recognize and differentiate individuals from diverse racial and ethnic backgrounds.

Limited or Outdated Algorithms and Technology

Older or simplistic algorithms may not be equipped to handle the complexity and variety of human facial features accurately. This limitation can lead to inaccuracies, especially in diverse populations, underscoring the need for continual updates and advancements in algorithmic design.

Human Bias in Data and Design

The biases of those who create and train facial recognition systems can inadvertently be embedded into the technology. This includes biases in the selection of training data and the design choices made during the development of algorithms.

Training Data Limitations

A significant source of bias is the training data used to develop facial recognition algorithms. If this data is not diverse enough or is skewed towards certain demographic groups, the resulting algorithms will likely inherit these biases.
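One way to spot this kind of skew before training is to count how much of the dataset each demographic group accounts for. The snippet below is a minimal sketch, not a description of any vendor’s pipeline: the group names, the sample counts, and the 10% `min_share` threshold are all illustrative assumptions.

```python
from collections import Counter


def representation_report(labels, min_share=0.10):
    """Return each group's count, share of the dataset, and an underrepresentation flag.

    labels: one demographic label per training image (hypothetical annotation).
    min_share: assumed threshold below which a group is flagged.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    report = {}
    for group, count in counts.items():
        share = count / total
        report[group] = {
            "count": count,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report


# Made-up labels purely for illustration.
sample_labels = ["group_a"] * 70 + ["group_b"] * 25 + ["group_c"] * 5
report = representation_report(sample_labels)
for group, stats in sorted(report.items()):
    print(group, stats)
```

A flagged group is a prompt to collect or source more data, not proof of bias on its own. The threshold that makes sense depends on the population the system will actually serve.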

Contextual Misinterpretation

Facial recognition systems might misinterpret facial expressions or contextual cues, leading to errors. This is particularly relevant in security applications, where misinterpretation can have serious implications.

The Importance of Effectively Measuring and Evaluating Bias

Measuring and evaluating bias in facial recognition technology matters for several reasons:

Enhancing Fairness and Equality: Measuring and evaluating bias is crucial for ensuring that facial recognition technology treats all individuals fairly, regardless of their race, gender, or ethnicity. This is essential for upholding ethical standards and social justice.

Improving Accuracy and Efficiency: Accurate measurement of bias leads to improvements in the technology, enhancing its overall accuracy and efficiency. This is particularly important in critical applications like security and law enforcement, where errors can have significant consequences.

Compliance with Legal and Regulatory Standards: Many countries and regions are introducing regulations to ensure that Artificial Intelligence technologies, including facial recognition, do not perpetuate biases. Effective measurement and evaluation are key to complying with these legal standards.

Preventing Discrimination: By identifying and addressing biases, technology developers and users can prevent discriminatory practices that might arise from flawed facial recognition systems.

Challenges and Issues of Facial Recognition Bias

Misidentification Risks: Inaccuracies in facial recognition can lead to misidentification, particularly among minority groups. This raises serious concerns for individual rights and justice, especially in legal and law enforcement contexts.

Ethical and Social Implications: Biased facial recognition systems can perpetuate and amplify existing societal biases, leading to discriminatory practices. This is particularly relevant in sectors like employment, where biased systems can affect hiring processes.

Trust and Reliability: The effectiveness of facial recognition technology depends heavily on its reliability. Biased systems can erode public trust, impacting the technology’s acceptance and usage.

Key Methods of Measuring Bias in Facial Recognition Technology

- False Positive Rate (FPR): This measures the frequency with which a system incorrectly identifies an individual as a match. A higher FPR in certain demographic groups indicates a bias in the system.

- False Negative Rate (FNR): This assesses how often the system fails to correctly identify a match. Disparities in FNR across different demographics are critical indicators of bias.

- Comparative Error Rates: Evaluating the error rates across different demographic groups can provide insights into whether the technology performs worse for certain groups.

- Diverse Dataset Analysis: Assessing the technology’s performance across a diverse set of data can reveal demographic biases.
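The metrics above can be computed per demographic group and then compared. Here is a minimal sketch, assuming each verification trial is recorded as a `(group, is_true_match, predicted_match)` tuple. That record format, the group names, and the helper names are assumptions made for this example.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute the false positive rate and false negative rate for each group.

    records: iterable of (group, is_true_match, predicted_match) tuples.
    A rate is None when a group has no trials of the relevant kind.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, is_true_match, predicted_match in records:
        if is_true_match:
            outcome = "tp" if predicted_match else "fn"
        else:
            outcome = "fp" if predicted_match else "tn"
        counts[group][outcome] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # trials where the faces do not match
        positives = c["tp"] + c["fn"]  # trials where the faces do match
        rates[group] = {
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in one metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


# Made-up trial outcomes purely for illustration.
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", False, True), ("group_b", False, False),
]
rates = error_rates_by_group(records)
print(rates)
print("FPR gap:", max_gap(rates, "fpr"))
print("FNR gap:", max_gap(rates, "fnr"))
```

A large gap in either metric between groups is the signal to investigate. With real data, each group needs enough trials for the rates to be statistically meaningful.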

Effectively measuring and evaluating bias in facial recognition technology is essential for ensuring its fair and equitable application. It’s also crucial to consider the role of identity verification in the gig economy, where facial recognition technology is increasingly being employed. Understanding its implications on fairness and bias is key to responsible deployment.

Related read: Top 10 Face Recognition Software (Feature Checklist Included)

Strategies for Mitigating Racial Bias in Facial Recognition

Mitigating racial bias in facial recognition technology is essential to ensure its equitable and effective use. Here are some strategies that can be employed to reduce bias:

Enhancing Algorithm Accuracy

Improving the accuracy of algorithms through advanced AI, ML, and Deep Learning techniques is fundamental. High-quality algorithms significantly reduce errors, underlining the importance of algorithmic quality in facial recognition technology (FRT).

Applying the Blind Taste Test Concept

Adopting approaches like the ‘blind taste test’ concept can help in reducing bias. This involves evaluating algorithms without knowledge of the demographics of the individuals in the images, thereby focusing solely on performance and accuracy without unconscious biases.
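One possible way to set up such a blind evaluation is to hand evaluators trials that carry only an opaque ID and the image pair, and to keep the demographic labels in a separate key that is consulted only after scores are final. The sketch below assumes each trial is a dictionary with `probe`, `reference`, and `group` fields. Those field names, the file names, and the helper functions are hypothetical.

```python
import random


def blind_trials(trials, seed=0):
    """Split trials into a blinded view and a sealed key mapping trial id to group."""
    rng = random.Random(seed)
    shuffled = list(trials)
    rng.shuffle(shuffled)  # remove any ordering that could hint at the group
    blinded, sealed_key = [], {}
    for index, trial in enumerate(shuffled):
        trial_id = f"trial-{index:04d}"
        blinded.append(
            {"id": trial_id, "probe": trial["probe"], "reference": trial["reference"]}
        )
        sealed_key[trial_id] = trial["group"]
    return blinded, sealed_key


def unblind(scores, sealed_key):
    """Group finalized scores by demographic label once evaluation is complete."""
    by_group = {}
    for trial_id, score in scores.items():
        by_group.setdefault(sealed_key[trial_id], []).append(score)
    return by_group


# Made-up trials purely for illustration.
trials = [
    {"probe": "p1.jpg", "reference": "r1.jpg", "group": "group_a"},
    {"probe": "p2.jpg", "reference": "r2.jpg", "group": "group_b"},
    {"probe": "p3.jpg", "reference": "r3.jpg", "group": "group_a"},
]
blinded, sealed_key = blind_trials(trials)

# Placeholder scores standing in for whatever the evaluated algorithm returns.
scores = {item["id"]: 1.0 for item in blinded}
by_group = unblind(scores, sealed_key)
print(by_group)
```

The point of the split is procedural: whoever tunes or judges the algorithm works only from `blinded`, and the per-group breakdown is produced afterwards from the sealed key.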

Rigorous Data Analysis and Improvement

Detailed, rigorous analysis of datasets and results allows scientists to refine algorithms and tackle bias in future software iterations. This includes better data labeling and external dataset auditing to ensure diversity and representativeness.

Enhancing Algorithm Quality

Under the broader strategy of mitigating bias, improving face recognition algorithms is key. The effectiveness of facial recognition technology hinges significantly on the accuracy and fairness of its algorithms. Addressing demographic imbalances in training data is essential to prevent biases. This involves refining data labeling and conducting external audits of datasets to ensure diversity and representativeness.

Reducing algorithmic bias and eliminating duplicates in training data are also crucial steps in enhancing the quality and fairness of face recognition systems. These efforts contribute significantly to the development of more equitable and effective facial recognition technologies.
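As a rough illustration of these two steps, the sketch below drops exact duplicate images and then assigns per-sample weights so that every demographic group contributes the same total weight during training. It rests on simplifying assumptions: samples are `(image_bytes, group)` pairs, duplicates are byte-for-byte identical (near-duplicates would need a perceptual hash instead), and inverse-frequency weighting is just one of several possible rebalancing techniques.

```python
import hashlib
from collections import Counter


def deduplicate(samples):
    """Drop byte-identical images, keeping the first occurrence of each."""
    seen = set()
    unique = []
    for image_bytes, group in samples:
        digest = hashlib.sha256(image_bytes).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append((image_bytes, group))
    return unique


def balancing_weights(samples):
    """Inverse-frequency weights: each group ends up with equal total weight."""
    counts = Counter(group for _, group in samples)
    n_groups = len(counts)
    total = len(samples)
    return [total / (n_groups * counts[group]) for _, group in samples]


# Made-up samples purely for illustration; real inputs would be image file contents.
samples = [
    (b"img1", "group_a"), (b"img1", "group_a"),  # exact duplicate
    (b"img2", "group_a"), (b"img3", "group_a"),
    (b"img4", "group_b"),
]
unique = deduplicate(samples)
weights = balancing_weights(unique)
for (_, group), weight in zip(unique, weights):
    print(group, round(weight, 3))
```

Weights like these can feed a weighted sampler or a weighted loss. They compensate for imbalance but do not replace collecting more data for the groups that are thinly represented.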

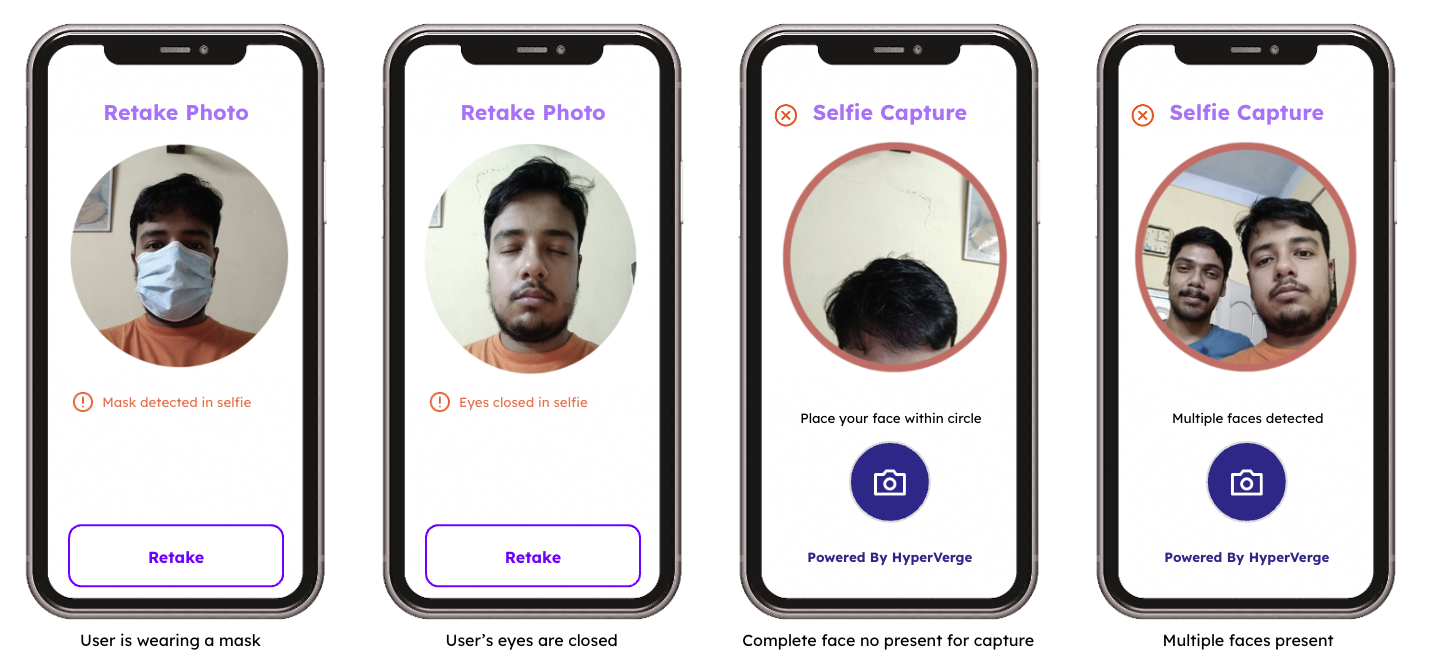

Importance of Liveness Checks and Biometrics

Incorporating liveness checks in facial recognition systems is crucial for enhancing the reliability and security of biometric verification. Liveness checks help in distinguishing between real and fake faces, thereby improving the overall robustness of facial recognition systems against spoofing attempts.

Understanding the role of liveness checks and biometric facial liveness detection is key to developing more secure and unbiased facial recognition systems.

Conclusion

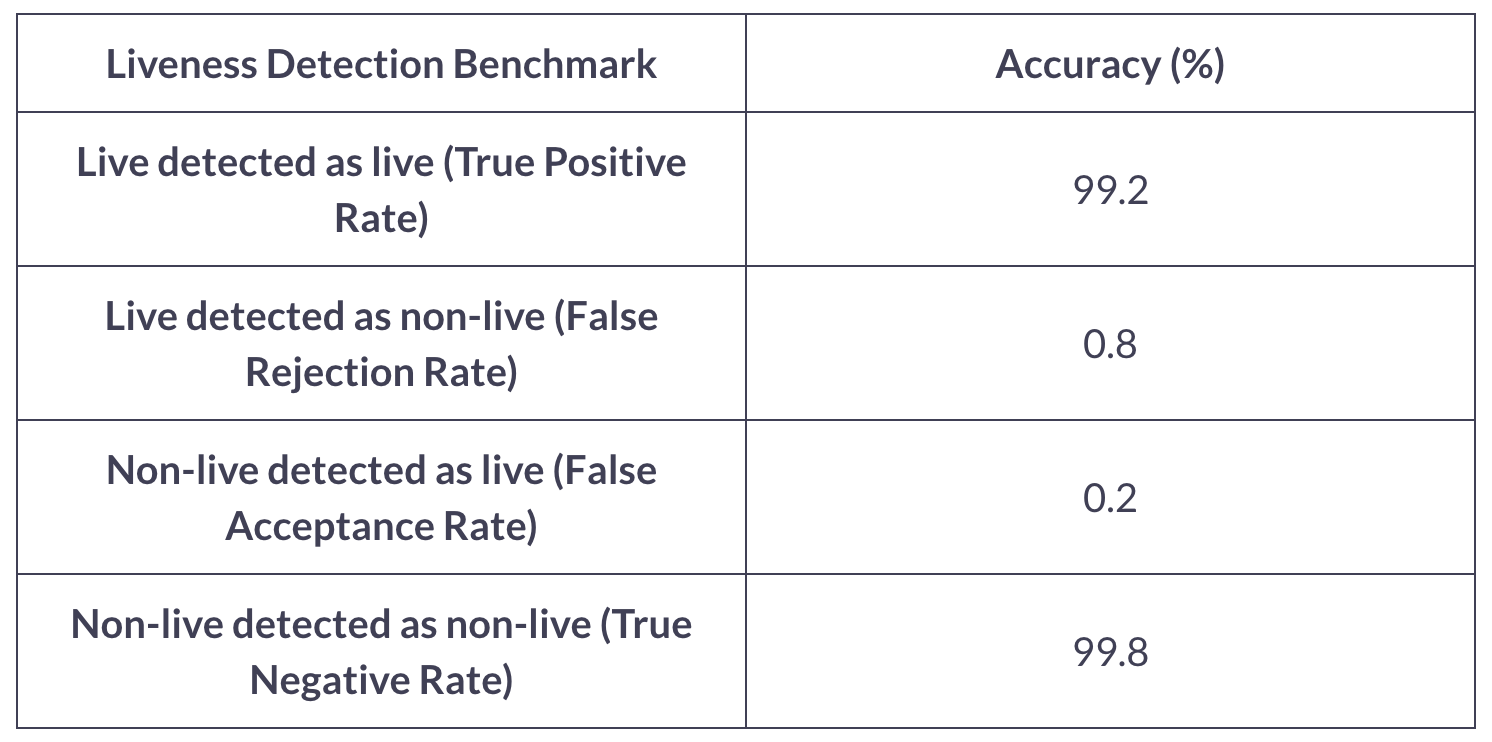

HyperVerge stands at the forefront of ensuring bias-free AI, offering facial recognition solutions that are rigorously trained on diverse global datasets. This approach minimizes AI-driven biases, ensuring that its technology is both fair and accurate. With prestigious global certifications from NIST and iBeta, HyperVerge’s commitment to excellence in facial recognition is evident.

Don’t take our word for it. Here are the accuracy benchmarks we boast:

For those keen on adopting facial recognition technology that upholds the highest standards of fairness and accuracy, HyperVerge’s face authentication is an ideal choice. You can always request a demo to experience the capabilities firsthand.
