Our team at HyperVerge, Inc ranked 4th at the DocVQA challenge hosted at CVPR 2020’s Workshop on Text and Documents in the Deep Learning Era. Anisha Gunjal summarises our approach and learnings.

Originally posted here. Code for training is available here.

Introduction to the dataset

Document Visual Question Answering (DocVQA) is a novel dataset for Visual Question Answering on Document Images. What makes this dataset unique as compared to other VQA tasks is that it requires modeling of text as well as complex layout structures of documents to be able to successfully answer the questions. For comprehensive information about the dataset, refer this paper.

Approach

Our approach draws inspiration from the recent breakthrough of language modeling in various Natural Language Processing tasks such as Text classification, Sentiment Analysis, Question Answering, etc.

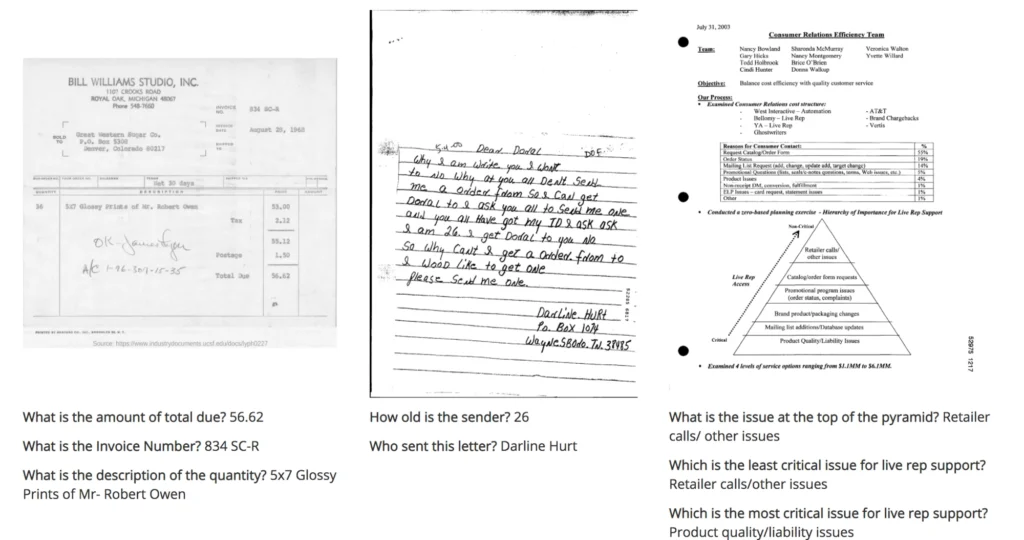

Although the existing models in NLP are useful to cater to the “text” aspect of this problem, it does not take any advantage of the available information related to the position of text in the document. To illustrate this let’s look at an example in Fig. 2. The posed question, “What is the issue at the top of the pyramid?” cannot be handled by text information alone and needs more intricate modeling which captures the structure of the document.

The highlighted problem is addressed by LayoutLM which utilizes the text and layout information present in scanned documents for pretraining through self-supervision. The LayoutLM architecture is exactly the same as BERT’s architecture, however, it incorporates additional 2D Position Embeddings and Image Embeddings along with the Text Embeddings. The LayoutLM model is pre-trained on document images using novel training objectives such as Masked Visual-Language Model and Multi-label Document Classification. The backbone of our model uses LayoutLM architecture.

The next step is defining the downstream task of Question Answering on the backbone provided by LayoutLM. We do this by modifying the LayoutLM architecture to predict two outputs: the start position and the end position.

Implementation Details

As discussed above, our model architecture is a modified version of LayoutLM to support the downstream task of Question Answering. Our implementation uses the pre-trained parameters provided by LayoutLM for initialization and fine-tuning.

Fig. 2 depicts the input data structure passed to the backbone network. The input data consists of Text and 2D Position Embeddings. The OCR transcriptions are provided to us along with the dataset.

Text Embeddings are similar to input embeddings you would use for a question answering task with BERT (e.g. SQUAD Dataset). For such a task, the token embeddings of question words and text from the document are packed together with a [SEP] or separator token in between. The Text Embeddings consist of the word, segment and position embeddings.

Additionally, we also have the 2D Position Embeddings to capture the document layout information. To further elaborate this point, each text token embedding has it’s corresponding coordinate value (x1,y1,x2,y2) represented in the 2D Position Embeddings. The coordinate values (x1,y1) and (x2,y2) are normalized bounding box coordinates from the OCR output provided in the dataset.

The Text and the 2D Position Embeddings are passed through our backbone LayoutLM model encoder to fetch output encodings.

The output encodings from the backbone transformer encoder architecture are passed to the downstream network. The downstream network for question answering predicts the span of the answer in terms of start and end index. Refer to this amazing blog by Chris McCormick on how to finetune BERT for the downstream task of Question Answering.

You can play around with a sample demo code at https://github.com/anisha2102/docvqa/blob/master/demo.ipynb.

Observations and Learnings

- Our model seemed to fail in some cases where a pre-trained BERT model on the SQUAD dataset worked well. On closer inspection, we noticed that the DocVQA dataset’s text does not have enough English language knowledge for the model to learn from. To address this, we added a subset of the SQUAD dataset along with the Docvqa dataset while training.

- The dataset has some questions based on images (similar to Text VQAdatasets)- e.g. Questions based on advertisement text. This would require including additional image embeddings of objects which is something we could not take up in the limited time frame.

- Although the model’s performance is above expectations on simple tables it can be quite unreliable in cases where questions are based on multi-column tables or hierarchical tables. e.g. What is the value of X row at Y column? We also tried to work on a separate module for table parsing, but the results were unsuccessful.

- Overall, this is a very interesting dataset to work on. It needs a lot of work on document understanding and ability to model layouts, images, text, graphs, figures, tables, etc.

Future Work

Our current implementation only relies on text and position information and does not include visual information. The future line of work should take this into account as adding the visual modality holds promise to improve the performance.

Resources

- DocVQA: A dataset for Document Visual Question Answering

- LayoutLM: Pre-training of Text and Layout for Document Image Understanding

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- Question Answering with a Fine-Tuned BERT

- Workshop on Text and Documents in the Deep Learning Era

- Stanford’s Question Answering Dataset

- TextVQA Dataset

A big shoutout to my teammates at HyperVerge, Inc — Digvijay Singh, Vipul Gupta & Moinak Bhattacharya for their contribution and guidance.